Building on the humble beginnings of my bike synthesizer project, I got the chance to develop it into a live performance for CYFEST 16 in Yerevan with my boi Rob. We called our project Cadence Clock: Rhythm of the Streets. In this post I’ll give an overview of our technical setup and decisions, and what went right and wrong.

Poster by Bogdan Boichuk:

Cadence Clock: Rhythm of the Streets is an immersive audio-visual experience navigating city streets on a fixed-gear bicycle. By equipping an otherwise minimalistic track bike with sensors, we attempt to augment the viewer’s senses to translate the visceral, connected, and synchronized flow of fixed-gear cycling through city traffic into perceivable sights and sounds, archiving these feelings. The rider’s movements, navigation style and decision making, modulated by the city’s traffic patterns, geography, and infrastructure, are emphasized through audio-visual synthesis and woven into a story exploring the rhythm of our city streets, who they are for, and how they can be reclaimed.

Promo video:

The Setup

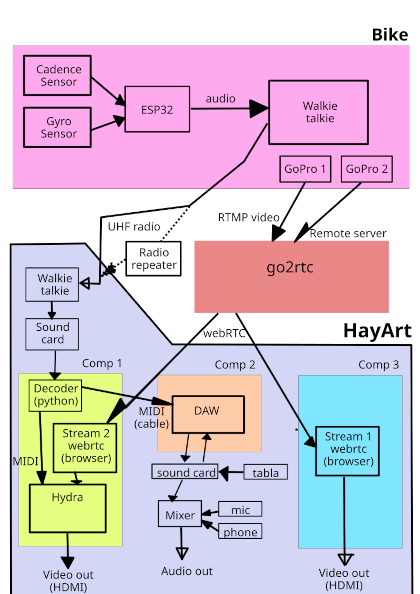

Our goal was a live audio-visual performance involving riding a track bike through the city, with sounds generated and livestreamed video modulated by the bike’s movement. Our extremely overcomplicated setup looked like this:

On the bike, we had an ESP32 microcontroller reading from two sensors - the cadence module and the accelerometer module. The cadence module is a Hall-effect sensor detecting the rotation of eight magnets glued to the chainring, which acts like a clock for generating sounds (cadence clock, get it?). The accelerometer module is an MPU6050 attached to the handlebars that captures their movement. Two video feeds (a PoV stream from Rob, and a third-person view from our friend Savva riding behind) were streamed using GoPros.
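To make the "clock" idea concrete, here is a minimal sketch of how pulse timestamps from the Hall sensor could be turned into a cadence figure. It is an illustration only, not the code running on our ESP32: the function name and the timestamp list are made up for the example, and the only number taken from the setup is the eight magnets per chainring revolution.

```python
MAGNETS_PER_REV = 8  # from the setup: eight magnets glued to the chainring


def cadence_rpm(pulse_times_s, magnets_per_rev=MAGNETS_PER_REV):
    """Estimate cadence (crank revolutions per minute) from pulse timestamps.

    pulse_times_s is a hypothetical list of times, in seconds, at which the
    Hall sensor saw a magnet pass. Fewer than two pulses gives no estimate.
    """
    if len(pulse_times_s) < 2:
        return 0.0
    elapsed = pulse_times_s[-1] - pulse_times_s[0]
    if elapsed <= 0:
        return 0.0
    intervals = len(pulse_times_s) - 1       # gaps between consecutive magnets
    revolutions = intervals / magnets_per_rev
    return revolutions / elapsed * 60.0


# 12 pulses per second with 8 magnets is 1.5 rev/s, i.e. 90 rpm.
times = [i / 12.0 for i in range(13)]
print(cadence_rpm(times))  # 90.0
```

Each magnet pass is effectively one clock tick, so pedalling faster speeds up whatever is being sequenced from those ticks.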

I initially really wanted to minimize latency and avoid disruptions from the internet dropping out, so I tried to stick to analog signals for both the sensor data and the video. For the sensor data, I ended up encoding it into audio as a sum of gated sine waves for the discrete cadence signal and frequency-shifted sines for the accelerometer signal, which was then decoded into MIDI with a Python script at the receiving end. This encoding was done by essentially using the ESP32 as a polyphonic synthesizer, with the audio output transmitted with a UHF radio walkie-talkie.
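The encoding idea can be sketched in a few lines of Python. This is a simplified model under my own assumptions, not the actual firmware or decoder: the sample rate, frame length, tone frequencies, and function names are all invented for the example, and it handles a single gate tone and a single accelerometer axis. The decoder uses the Goertzel algorithm to measure power at specific frequencies.

```python
import math

SAMPLE_RATE = 8000     # Hz (assumed)
FRAME = 400            # samples per frame = 50 ms, so bins are 20 Hz apart (assumed)
GATE_HZ = 1000.0       # assumed tone that is on only while a magnet passes
ACCEL_LO_HZ = 1500.0   # assumed tone frequency for accel = 0.0
ACCEL_HI_HZ = 2500.0   # assumed tone frequency for accel = 1.0
STEPS = 50             # accel resolution: one step per 20 Hz bin


def encode_frame(gate_on, accel):
    """Synthesize one frame: a gated sine plus a sine whose pitch tracks accel in [0, 1]."""
    accel_hz = ACCEL_LO_HZ + accel * (ACCEL_HI_HZ - ACCEL_LO_HZ)
    frame = []
    for n in range(FRAME):
        t = n / SAMPLE_RATE
        sample = 0.4 * math.sin(2 * math.pi * accel_hz * t)
        if gate_on:
            sample += 0.4 * math.sin(2 * math.pi * GATE_HZ * t)
        frame.append(sample)
    return frame


def goertzel_power(samples, freq_hz):
    """Squared magnitude of a single frequency component (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq_hz / SAMPLE_RATE)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def decode_frame(samples, gate_threshold=0.04):
    """Recover (gate_on, accel) from one frame of audio."""
    # Power of a full-scale sine sitting exactly on a bin, used for normalization.
    scale = (len(samples) / 2) ** 2
    gate_on = goertzel_power(samples, GATE_HZ) / scale > gate_threshold

    def candidate_power(k):
        freq = ACCEL_LO_HZ + k * (ACCEL_HI_HZ - ACCEL_LO_HZ) / STEPS
        return goertzel_power(samples, freq)

    # The accelerometer value is wherever the loudest candidate tone sits.
    best_k = max(range(STEPS + 1), key=candidate_power)
    return gate_on, best_k / STEPS


print(decode_frame(encode_frame(True, 0.3)))  # (True, 0.3)
```

Note that a fixed `gate_threshold` like this one assumes the audio arrives at a stable level, and a real radio or VoIP channel adds noise and gain changes that this clean round trip does not model.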

For transmitting an analog video signal, we first experimented with a system designed for flying drones and model aircraft. While the latency and video quality were great, we unfortunately didn’t account for how strongly this radio signal would be attenuated by obstacles in an urban environment when both the transmitter and receiver are at ground level. We then decided to scrap the analog video idea and stream directly from GoPros over mobile internet. The RTMP streams from the GoPros were decent, but latency was all over the place - we got better results setting up a go2rtc server as an intermediary and sending WebRTC video to the endpoints with under 2 seconds of latency.

On the receiving end at the performance venue, once the sensor signals were converted into MIDI, they were fed into generative VCV Rack patches (coordinated by a DAW) for the sounds, and used to modulate the video streams with Hydra.
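For a sense of what "converted into MIDI" can mean at the byte level, here is a small sketch that maps a sensor reading onto a MIDI Control Change message and a cadence pulse onto a Note On. The value range, controller number, note number, and function names are placeholders I picked for the example, not the mapping we actually used.

```python
def control_change(channel, controller, value):
    """Build a raw 3-byte MIDI Control Change message (status byte 0xB0 | channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def note_on(channel, note, velocity):
    """Build a raw 3-byte MIDI Note On message (status byte 0x90 | channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def accel_to_cc(accel, lo=-1.0, hi=1.0, controller=1, channel=0):
    """Clamp a reading to [lo, hi] (assumed range) and scale it to a 7-bit CC value."""
    clamped = min(max(accel, lo), hi)
    value = round((clamped - lo) / (hi - lo) * 127)
    return control_change(channel, controller, value)


print(accel_to_cc(1.0).hex())      # b0017f
print(accel_to_cc(-1.0).hex())     # b00100
print(note_on(0, 60, 100).hex())   # 903c64
```

Messages like these can be handed to any MIDI output library or virtual port, which is how a patch or a visual sketch would pick them up.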

How’d it go?

Not quite according to plan… everything went great at the start of the performance, but then Murphy’s law struck and things started falling apart.

- A couple of days before the performance, we realized the RF signals from the walkie-talkie transmission were causing strong interference on the ESP32, messing up the analog readings from the Hall sensor and the I2C communication with the accelerometer module. With not much time to reliably figure out and test electromagnetic shielding, we ditched the walkie-talkies and decided to transmit audio over a VoIP call, which worked pretty well. However, during the performance we ended up using the VoIP client on a different (untested) OS, which resulted in wildly fluctuating audio levels (presumably due to some noise suppression feature) that our decoder couldn’t handle well. This made our MIDI signals very unstable.

- We needed a stable upload bandwidth of ~8 Mb/s for our video streams, which the mobile internet handled well during our tests. Sadly, the internet gods weren’t on our side on the day of the performance and the video streams kept dropping. The lag from dropped frames also kept accumulating, leading to synchronization issues.

- The individual parts of our setup worked great in isolation, but we lacked the diligent integration testing required to work out the kinks in such a complex interconnected system.

Excerpt from move2armenia’s video from CYFEST 16:

What did we learn?

- Don’t overcomplicate things.

- Our aversion to sending our sensor signals over the internet was misplaced. A day after the performance I managed to send them from the ESP32 over its built-in WiFi as OSC messages, and it worked perfectly. I regret not starting with that.

- While the image quality and stabilization of the GoPros were amazing, it would have made more sense to just use phones and a video call app specifically optimized for low latency and robustness.
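To show how little is involved in the OSC route from the second point, here is a sketch of an OSC message built by hand and sent over UDP. It is written in Python to illustrate the wire format rather than as ESP32 firmware; the address `/bike/accel`, the host, the port, and the function names are made up for the example.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address, *floats):
    """Build an OSC message whose arguments are all big-endian float32 values."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(host, port, address, *floats):
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *floats), (host, port))


# Hypothetical address carrying two accelerometer axes.
message = osc_message("/bike/accel", 0.5, -0.25)
print(len(message))  # 24
```

A call such as `send_osc("192.168.1.50", 9000, "/bike/accel", 0.5, -0.25)` would deliver the same bytes to whatever is listening on that (hypothetical) host and port, with no audio encoding or decoding step in between.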

Despite the setbacks, performing at CYFEST was an amazing experience overall. It was a huge challenge and I had to learn a lot from scratch as I went - from soldering and hardware prototyping to working with low-level audio. A few months ago it would have been hard to imagine we’d come as far as we did, and we’re very grateful to our curators Sergei Komarov and Lidiia Griaznova for their faith in us, and the team at sound enthusiastic community for their technical support during the performance.

This was the birth of Cadence Clock, and we’re very excited to see where it takes us next! Stay tuned.

Code

A messy repo with the code for the ESP32 bike unit, Python decoder, and visualizations can be found here.